Enterprise teams are eagerly embracing generative AI – yet they face a dilemma. On one hand, tools like ChatGPT promise huge productivity gains; on the other, they raise serious security and compliance risks. It’s no surprise that prominent companies, including JPMorgan Chase, Apple and Verizon, have entirely blocked internal use of ChatGPT due to privacy and security concerns. But outright bans come at a cost: employees then turn to unsanctioned AI apps (“shadow AI”) to get their work done, often without IT’s knowledge. In fact, the share of employees using generative AI jumped from 74% to 96% between 2023 and 2024, and 38% of employees admit to sharing sensitive work data with AI tools without employer approval. This article explains what a secure generative AI workspace is and why enterprises need one. We’ll explore common pain points – from data privacy to tool fragmentation – and outline features to look for so your team can innovate with AI safely and responsibly.

Why Enterprises Need a Secure AI Workspace

Generative AI’s rapid rise has left enterprise leaders grappling with how to enable its benefits without compromising on security, privacy or governance. Key pain points driving the need for a secure AI workspace include:

- Data Privacy & Confidentiality: AI models often require sending data to third‑party clouds, raising the risk of leaks. Companies have learned this the hard way – Samsung, for example, banned employees from using ChatGPT after discovering sensitive code was uploaded to it. A major concern was that data submitted to public AI services could not be retrieved or deleted and might even be seen by other users. Enterprises worry that confidential information (from source code to customer data) could inadvertently slip out via AI. In a Cisco survey, 48% of professionals admitted to entering non‑public company info into AI tools. A secure AI workspace is needed to prevent such inadvertent data exposure.

- Regulatory Compliance: Organisations in finance, healthcare, government and beyond face strict data regulations (GDPR, HIPAA, etc.). Using external AI services without safeguards can lead to non‑compliance. Shadow AI usage can easily violate data protection laws, potentially incurring fines of up to €20 million or 4% of global annual turnover, whichever is higher, under GDPR. For heavily regulated industries, any AI solution must ensure data residency, auditability and compliance controls. A secure AI workspace can enforce these – for example, by logging all AI interactions and preventing retention of personal data – so that teams can harness AI while staying within legal guardrails.

- Security & IP Protection: Generative AI introduces new security concerns like prompt injections (malicious inputs that make an AI divulge information or take undesirable actions) and the risk of AI revealing sensitive training data. Traditional cyber defences don’t monitor AI queries and responses. This gap has spawned a new class of “AI firewalls” designed to filter prompts and outputs, preventing misuse and data leakage. Without such measures, an employee might unknowingly paste proprietary code into a chatbot, and if the provider retains or trains on that input, the code could resurface in responses to other users. Insider misuse is also a risk – in one poll, 1 in 5 UK companies reported data leaks due to employees using GenAI tools. A secure AI workspace helps by acting as a sandbox: it can intercept unsafe requests or answers (such as those containing confidential data or toxic content) and block them, much like an email DLP or web firewall but for AI. This keeps intellectual property and customer data from leaking through AI channels.

- Fragmentation & Productivity Loss: The GenAI boom has led to a glut of tools – GPT‑4, Claude, Bard, Llama variants, countless specialised models – each with different strengths. For users, “too many options with too much overlap” leads to paralysis, as teams waste time juggling multiple AI accounts and subscriptions. We’re already seeing AI fragmentation: one team might use an AI writing assistant in Notion, another uses Microsoft’s Copilot in Office, while developers use an open‑source code model – none of which talk to each other or follow a unified policy. This siloed approach is inefficient and hard to manage. Employees often resort to whichever AI tool is handy (“shadow AI”), which “introduces unique concerns related to data management and decision-making” outside IT oversight. A secure AI workspace can consolidate AI access – providing one platform where users can tap multiple models and data sources. This not only improves productivity (no more hopping between apps) but also lets IT standardise user experience and governance across the organisation.

- Lack of Governance & Oversight: Most companies simply aren’t prepared to govern AI use. One study found that fewer than half (44%) of organisations have well-defined policies for generative AI. Without clear guidelines or monitoring, employees may misuse AI (even unintentionally) or rely on its outputs without review, causing serious mistakes. Generative AI can produce incorrect or biased results; if left unchecked, this could lead to bad decisions or reputational damage. The U.S. FTC Chair warned that AI tools can “turbo-charge fraud [and] automate discrimination” if used recklessly. A secure AI workspace gives management the needed oversight: administrators can set usage policies (e.g. disallowing certain data or queries), require human review of AI outputs in high‑stakes cases, and keep audit logs of all AI interactions. Robust logging and audit trails mean if an issue arises – say an employee tried to input a client’s personal data – it can be traced and addressed. In short, governance features turn AI from a wild west into a well‑monitored resource.
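The audit trail described in the last point can be pictured as a simple append-only record store. The sketch below is illustrative only: the `AuditRecord` fields, the `AuditLog` class and the example user and model names are assumptions made for this article, not the schema of any particular product, and a real deployment would write to durable, tamper-resistant storage rather than memory.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    """One logged AI interaction (hypothetical schema)."""
    timestamp: str
    user_id: str
    model: str
    prompt: str
    response: str
    blocked: bool
    reason: str


class AuditLog:
    """Append-only, in-memory log used purely for illustration."""

    def __init__(self):
        self._records = []

    def record(self, user_id, model, prompt, response, blocked=False, reason=""):
        """Store one interaction under the user's identity with a UTC timestamp."""
        entry = AuditRecord(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user_id=user_id,
            model=model,
            prompt=prompt,
            response=response,
            blocked=blocked,
            reason=reason,
        )
        self._records.append(entry)
        return entry

    def blocked_by_user(self, user_id):
        """Return every blocked interaction for one user, e.g. for an investigation."""
        return [r for r in self._records if r.user_id == user_id and r.blocked]

    def export_jsonl(self):
        """Serialise the log as JSON Lines, a format many log pipelines can ingest."""
        return "\n".join(json.dumps(asdict(r)) for r in self._records)


log = AuditLog()
log.record("alice", "model-a", "Summarise our Q3 plan", "Here is a summary...")
log.record("bob", "model-a", "Client personal details", "",
           blocked=True, reason="personal data in prompt")
```

With records like these, tracing an incident becomes a query (`log.blocked_by_user("bob")`) rather than a forensic exercise.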

In summary, enterprises recognise that generative AI is the future – 83% of finance leaders say AI is crucial to their industry’s future – but valid fears around security, privacy and control are holding them back. A secure AI workspace directly tackles these pain points, enabling teams to adopt AI on their own terms rather than resorting to risky workarounds or missing out entirely.

What Is a Secure GenAI Workspace?

A secure generative AI workspace is an integrated environment (either cloud‑based in a private tenant, or on‑premises) that lets enterprise teams use AI tools with full confidence in security, privacy and compliance. Think of it as a walled garden for AI work: employees get the creative power of generative AI (from answering questions to drafting content or analysing data) but within a protected space controlled by the organisation. All inputs, outputs and usage stay under your company’s policies and visibility, rather than floating around on public AI servers.

Crucially, a secure AI workspace combines productivity features with governance features in one package. Users might interface with it like a familiar AI chatbot or assistant, but behind the scenes, the system is enforcing rules – much like how a company VPN or secure intranet works for web access. For example, the workspace may route all AI queries through an AI firewall that monitors for sensitive data or unsafe content. In essence, it acts as a smart intermediary between the user and AI models, filtering prompts and responses in real time to prevent policy violations. The organisation can typically integrate its knowledge bases or data sources so that answers are relevant but still secure. And unlike generic consumer AI apps, an enterprise workspace will not retain your data or use it to train models unless you allow it.
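To make the intermediary idea concrete, here is a minimal sketch of a gateway that screens a prompt, calls a model, and then screens the response. Everything in it is an assumption for illustration: the two regular expressions, the single blocked phrase, and the `guarded_call` and `echo_model` names are hypothetical, and a production AI firewall would rely on far broader detection than a couple of patterns.

```python
import re

# Illustrative patterns only; real deployments need much wider coverage.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits
}
# A single known attack phrase, standing in for a real injection detector.
BLOCKED_PHRASES = ("ignore previous instructions",)


def redact(text):
    """Replace each sensitive match with a placeholder; return text and labels hit."""
    hits = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        text, count = pattern.subn(f"[{label} REDACTED]", text)
        if count:
            hits.append(label)
    return text, hits


def guarded_call(prompt, model):
    """Filter the prompt, call the model, then filter the response."""
    if any(phrase in prompt.lower() for phrase in BLOCKED_PHRASES):
        return {"status": "blocked", "response": None, "redactions": []}
    safe_prompt, prompt_hits = redact(prompt)
    raw_response = model(safe_prompt)
    safe_response, response_hits = redact(raw_response)
    return {
        "status": "ok",
        "response": safe_response,
        "redactions": prompt_hits + response_hits,
    }


def echo_model(prompt):
    """Stand-in for a real model call, so the sketch runs without any API."""
    return f"You said: {prompt}"


result = guarded_call(
    "Email jane.doe@example.com about card 4111 1111 1111 1111", echo_model
)
```

The model only ever sees the redacted prompt, and the user only ever sees the redacted response, which is the essence of filtering in both directions.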

Secure AI workspaces are emerging as a key solution for enterprises wanting to unlock GenAI’s value without the usual risks. Tech companies like OpenAI, Google and Cohere have all acknowledged this need. OpenAI’s ChatGPT Enterprise, for instance, promises that customer prompts and data won’t be used for training models, that all conversations are encrypted, and that the service is SOC 2 compliant. Cohere’s new platform, North, was explicitly launched as “a secure AI workspace that lets companies deploy AI while maintaining control over sensitive data”, even offering private cloud or on‑prem deployments for regulated industries. In short, the concept goes beyond just having an AI chatbot with a login – it’s about creating a safe, governed environment for all your team’s AI‑powered tasks, from brainstorming and writing to data analysis.

Key Features to Look For in a Secure AI Workspace

Not all AI solutions marketed as “secure” are equal. When evaluating a generative AI workspace for enterprise use, you should look for several critical capabilities and safeguards. Below are the key features a truly secure AI workspace should offer:

- Data Privacy and Isolation: Your data should remain your data. The platform must ensure that any information users input or generate stays within your control. This means no sharing of your prompts or outputs into public model training feeds, strict data encryption, and options for data residency (storing data in specific regions or on your own servers). The strongest solutions let you deploy the workspace in a private cloud VPC or even on‑premises. Also look for compliance certifications (ISO 27001, etc.) that indicate rigorous data security practices. Essentially, a secure workspace should give the same level of data protection as other enterprise SaaS apps (or better) – encryption both in transit and at rest, and clear assurances that your prompts or files will not leak out or be used to improve the provider’s AI model without permission.

- AI Firewall and Content Safeguards: One defining feature of these workspaces is a built‑in AI firewall or policy engine that governs the AI’s inputs/outputs. This is the safety net preventing both accidental and malicious incidents. It should include prompt filtering (blocking or sanitising prompts that contain forbidden information or known attack phrases) and response filtering (scrubbing any sensitive data or disallowed content from the AI’s answer before the user sees it). In practice, a generative AI firewall monitors all prompt/response traffic and enforces policies to prevent data leakage, misuse or toxicity. Advanced firewalls also detect things like prompt injection attempts or “jailbreak” exploits that try to make the model bypass safety rules. For instance, if a user inadvertently asks the AI to output something confidential (like personally identifiable information or proprietary code), the system should catch it and block or redact that part of the answer. Content moderation is another aspect – flagging or removing hateful, harassing or otherwise inappropriate outputs in line with company policies. In short, the workspace needs robust guardrails so the AI remains a helpful assistant and not a compliance nightmare.

- Multi‑Model Access and Flexibility: A big advantage of an AI workspace platform is the ability to access multiple AI models through one interface. Rather than being locked into a single model (and thus a single vendor or a one‑size‑fits‑all approach), the workspace should support a range of leading models – e.g. OpenAI’s GPT‑4, Anthropic’s Claude, perhaps open‑source LLMs or domain‑specific models – and let users choose or automatically use the best one for a task. This flexibility is crucial for future‑proofing and avoiding vendor lock‑in. Different models have different strengths (some may be better at coding, others at creative writing, some self‑hosted models might be required for ultra‑sensitive data). Your workspace should at least let you route queries to different models or swap out the underlying model service. In essence, look for a platform that is model‑agnostic – it acts as a unifying layer over many AI engines. This not only prevents fragmentation but also means your team can experiment freely and use the most appropriate AI solution as the technology evolves.

- Enterprise Identity & Access Control: Integration with your corporate single sign‑on (SSO) and identity management (Azure AD, Okta, etc.) is a must. You want to easily onboard and offboard users, and assign role‑based permissions. A secure AI workspace should allow admin control over who can access what – for instance, perhaps only certain approved users can use code‑generation features, or only specific departments can access particular data within prompts. It should log each user’s activity under their identity for auditing. Support for multi‑factor authentication and integration with existing Identity and Access Management (IAM) policies is important so that use of the AI workspace fits into your normal IT security regime. Essentially, the platform should behave like any enterprise software in terms of user management (unlike consumer AI tools, which are tied to personal accounts with no organisational oversight).

- Collaboration and Knowledge Integration: Since this is a “workspace,” it should facilitate teamwork and knowledge sharing in a controlled way. Look for features to organise content and AI interactions into projects or shared spaces that mirror your organisational structure. For example, multiple colleagues might collaborate on an AI‑assisted research report within a shared workspace, seeing each other’s AI queries and findings. The platform should allow document and data sharing internally without risk of exposure externally. Some workspaces provide a project‑based knowledge base, meaning you can feed relevant internal documents or context into a project so the AI can give more informed answers (all while keeping that data internal). A secure AI workspace should similarly allow integration with your internal knowledge sources (e.g. connecting your SharePoint or Confluence repository in read‑only mode for the AI to retrieve facts) under tight access controls. This way, the AI becomes far more useful for employees – providing real answers based on company knowledge, not just public training data – yet does so without copying that knowledge outside the company. Additionally, features like chat templates or reusable workflows can ensure consistency.

- Audit Logging and Compliance Support: Enterprises will need to demonstrate and document how AI is being used. A secure AI workspace should automatically keep a secure log of all user prompts and AI responses, timestamps, which model was used, and any interventions (like if the AI firewall blocked something). These logs are invaluable for compliance audits, incident investigations, or just quality control. If a regulator or client asks, “How do you ensure no customer data is being misused by AI?”, you should be able to show detailed records. Many solutions allow exporting logs or integrating them into your Security Information and Event Management (SIEM) tools. Also, look for compliance‑specific features: the ability to set data retention policies (e.g. auto‑delete AI session data after X days if required), features aligning with frameworks like NIST AI Risk Management or industry standards, and possibly certifications that the platform meets sector‑specific guidelines (for example, HIPAA compliance for healthcare data, or FINRA compliance for financial communications). In short, the workspace should make it easier to govern AI use in line with your existing compliance obligations, rather than introducing new gaps.

- User‑Friendly Interface and Integration into Workflow: All the security in the world won’t help if employees find the tool cumbersome and resort to shadow AI out of frustration. So, a good, secure AI workspace needs to be usable. That means a friendly chat‑style interface or other intuitive UI for interacting with the AI, and ideally integration into the tools where work already happens (such as plugins for popular software or a way to use the AI assistant in email, documents, Slack/Teams chats, etc.). For example, the workspace might offer a browser extension or integrations with Google Workspace and Microsoft Office so that users can invoke the AI helper while editing a document or drafting an email. Seamless integration and ease of use will drive adoption, which paradoxically also improves security, because if the approved tool is convenient, employees have less incentive to seek unsanctioned alternatives. In evaluating solutions, consider running a pilot with end‑users to ensure the AI workspace actually improves their workflow (e.g. by saving time on tasks like content creation, research, coding assistance, etc.) without too much friction.
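Two of the features above, multi‑model access and role‑based permissions, can be combined in a single dispatch step. The sketch below is one possible shape, not a description of how any specific platform works: the `ModelRoute` and `Router` classes, the model names, the task labels and the roles are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRoute:
    """One entry in the routing table (all values are illustrative)."""
    name: str            # hypothetical model identifier
    tasks: set           # task types this model is preferred for
    allowed_roles: set   # roles permitted to use it


@dataclass
class Router:
    routes: list = field(default_factory=list)

    def pick(self, task, role):
        """Return the first model that handles the task and permits the role."""
        for route in self.routes:
            if task in route.tasks and role in route.allowed_roles:
                return route.name
        raise PermissionError(
            f"No model available for task={task!r}, role={role!r}"
        )


# Order matters: earlier routes take priority over later ones.
router = Router([
    ModelRoute("self-hosted-llm", {"sensitive"}, {"legal", "engineer"}),
    ModelRoute("code-model", {"coding"}, {"engineer"}),
    ModelRoute("general-model", {"writing"}, {"engineer", "marketing", "legal"}),
])

chosen = router.pick("coding", "engineer")
```

Because the routing table is plain data, swapping out an underlying model or tightening who may use code generation is a configuration change rather than a rewrite, which is the practical meaning of being model‑agnostic.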

In summary, an enterprise‑grade GenAI workspace should cover the three C’s: Control (over data, access and usage), Compliance (with security and legal requirements), and Convenience (so that employees can be more productive, not less). If any one of these is lacking, the solution may fail to actually solve the initial problem (for example, a super‑secure tool that nobody wants to use, or a convenient AI tool that still leaks data). Keep these features in mind as a checklist when comparing options.

Comparing Solutions and Approaches

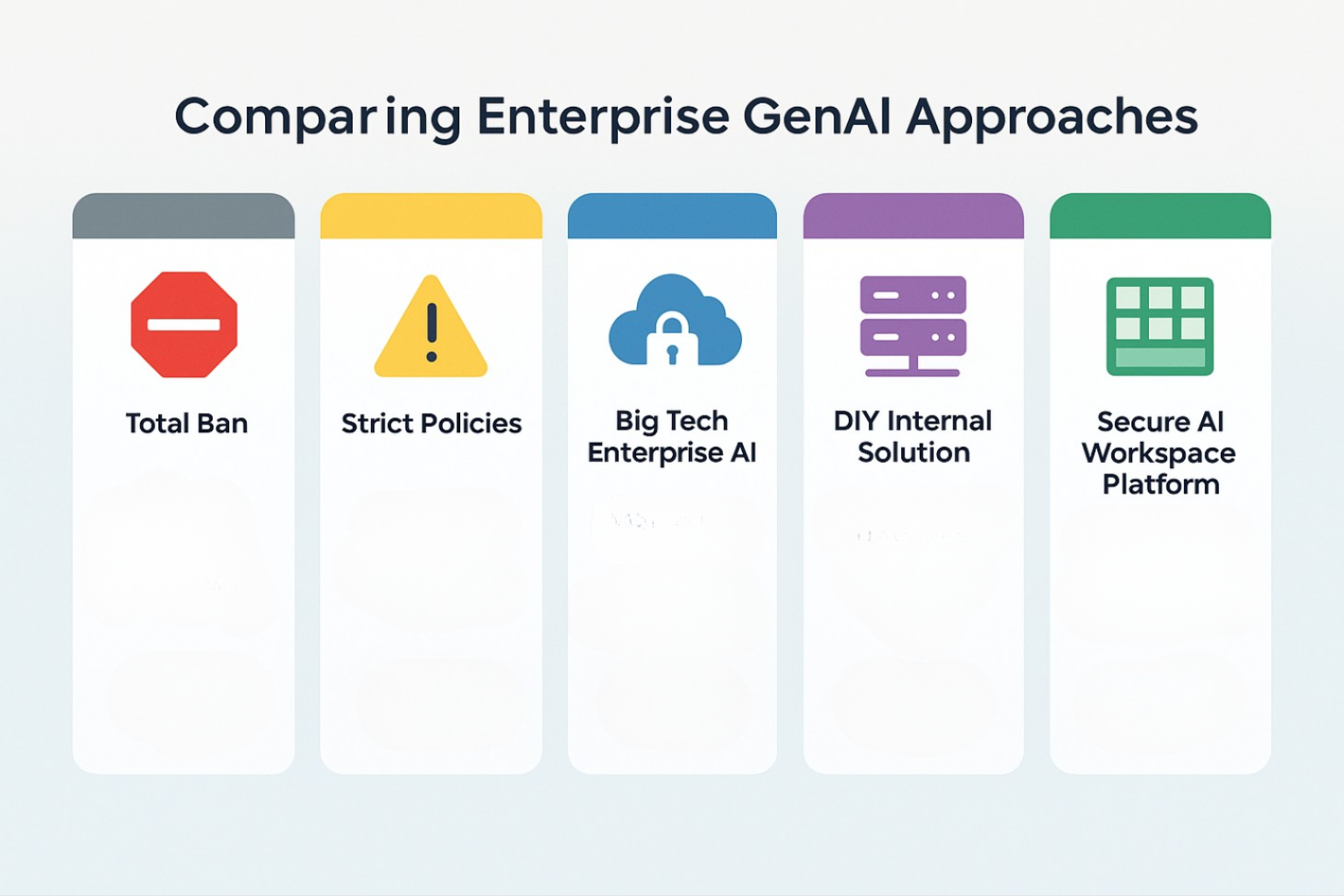

Enterprise decision‑makers today have a few paths to enable generative AI, each with pros and cons. Understanding the landscape will help illustrate why the dedicated “secure workspace” model has gained traction:

- Do Nothing / Total Ban: As mentioned, some firms reacted to AI risks by forbidding employees from using tools like ChatGPT. This approach (seen at companies like JPMorgan, Apple, and others) does eliminate immediate leakage risks, but it’s not sustainable. Employees may feel left behind and will likely find workarounds (using AI on personal devices or accounts – the shadow AI problem). Bans sacrifice the productivity and innovation gains that come from AI assistance, essentially putting the company at a competitive disadvantage in the long run. It’s a stopgap at best, as even Samsung, which temporarily banned generative AI, stated that it was working on measures to “create a secure environment for safely using generative AI” internally. In other words, outright bans are forcing the question: how do we safely say “yes” to AI?

- Allow Use with Strict Policies: Another approach is to permit use of certain AI tools but rely on trust and guidelines (e.g. “you may use ChatGPT but never input client data or any confidential info”). While better than a ban, this is difficult to enforce. As the survey data shows, many employees either ignore or misunderstand such policies – recall that 48% of professionals admitted to entering non‑public company information into AI tools. Without technical controls, it only takes one mistake to cause a breach. Moreover, manual policies don’t mitigate other risks like incorrect outputs or a lack of oversight on how AI is used. So this approach leaves a lot of exposure and essentially depends on every single employee’s caution. It’s also hard to scale; as usage grows, compliance teams struggle to monitor what questions are being asked or what content is generated. Thus, many organisations that started with “use AI carefully” guidelines are now looking for more systematic solutions.

- Big Tech Enterprise AI Offerings: Recognising the demand, major AI providers launched enterprise‑grade versions of their AI. ChatGPT Enterprise (OpenAI) and Microsoft’s Azure OpenAI service are prime examples. These typically address some privacy issues – e.g. OpenAI’s enterprise service assures that by default “we do not train on your business data or conversations” and provides an admin console and encryption. Microsoft’s Azure OpenAI allows hosting OpenAI models in the customer’s Azure environment, adding enterprise security layers. Similarly, Google’s generative AI offerings for Google Workspace assure customers that data from Gmail/Docs AI features isn’t used to train models and is secured. These options are appealing, especially if you are already invested in those ecosystems. For instance, if your company lives in Microsoft 365, enabling Copilot for your tenant’s documents and emails keeps data within your Microsoft cloud tenant. The limitation, however, is ecosystem lock‑in and model choice. ChatGPT Enterprise gives you GPT‑4 (and maybe other OpenAI models) but not competitors; Microsoft’s Copilot largely uses OpenAI models via Azure; Google’s uses their own models. You might still end up with different AI assistants in different apps and no single point of control. Also, these solutions might not cover all use cases – e.g. they might excel at text generation but not code, or vice versa, leading teams to seek additional tools.

- DIY Internal Solutions: For the most control, some organisations build their own internal AI systems. This could mean deploying open‑source LLMs on company servers, or developing custom chatbots for specific functions (fine‑tuned on internal data). Big banks and firms with large IT budgets have gone this route – for example, JPMorgan reportedly developed an in‑house ChatGPT‑like tool called “IndexGPT” and an AI assistant for its advisors, to avoid sending data externally. The advantage here is maximum data control and customisation. However, the barriers are significant: it requires AI engineering talent, infrastructure (potentially expensive GPU clusters), and ongoing maintenance to keep models updated and safe. Open‑source models can be hosted securely, but one must still implement all the guardrails and interfaces around them – effectively building your own secure AI workspace from scratch. Most companies outside the tech giants or top banks find this too resource‑intensive. It’s also risky because the AI field moves fast; a DIY solution could become obsolete or insecure if not continually improved. In short, rolling your own is feasible for some (and you retain ownership of everything), but for many it’s not practical compared to leveraging a vendor solution that specialises in this domain.

- Dedicated Secure AI Workspace Platforms: This is where solutions like CoSpaceGPT, Cohere North, PromptOwl, and others come in. They position themselves as all‑in‑one secure AI platforms for teams, combining the best of the above approaches. Typically, they offer multi‑model flexibility, strong security/governance built in, and user‑friendly collaboration features. To illustrate, CoSpaceGPT is one such platform that we (Cloudsine) have developed to meet enterprise needs. It brings together multiple leading AI models in one secure hub, with built‑in safety guardrails and team collaboration tools. In CoSpaceGPT, users can seamlessly switch between models like GPT‑4, Claude, Google’s models, and even upcoming ones like Meta’s, depending on what’s needed. Throughout, it can automatically redact sensitive information in prompts or outputs and flag anything that violates company policy. Furthermore, CoSpaceGPT lets teams organise their chats, documents and AI‑generated content into shared projects, each with its own context (almost like having a secure, AI‑powered project workspace). The goal is to prevent the fragmentation we discussed: instead of AI being used ad hoc all over the place, everything funnels through one coordinated platform that “centralises AI interactions in an organised space.” And importantly, we designed it such that what happens in your workspace stays in your workspace – your data isn’t used to train our models, and the platform can even be deployed on‑prem for total control, if needed. CoSpaceGPT is one example among a growing field of solutions trying to strike the right balance between usability and security.

Each organisation will need to compare these approaches based on factors like their regulatory environment, budget, and internal expertise. Many are finding that a dedicated secure workspace platform offers the quickest and most comprehensive way to enable AI safely. It essentially shortcuts the journey: rather than each company reinventing AI safety and integration on its own, the platform comes with those capabilities out of the box. That said, evaluating vendors is important – ensure they tick the feature boxes we outlined (data privacy, robust guardrails, etc.) and consider pilot testing with a small team.

One practical tip when comparing solutions: involve both IT/security and the actual end‑users. The security team will vet things like encryption and compliance, while end‑users can tell you if the AI actually helps in their day‑to‑day tasks. A secure AI workspace only delivers value if it is both trusted and widely adopted by your team.

Conclusion: Empowering Innovation Without Compromise

Generative AI is often described as a “wild west” in the enterprise right now – full of opportunity, yet fraught with risk if unmanaged. A secure GenAI workspace is essentially about bringing law and order to this frontier so that employees can innovate freely without putting the company in jeopardy. By providing a safe, governed environment for AI‑powered work, enterprises can turn generative AI from a shadowy free‑for‑all into a reliable business tool. The payoff is significant: teams get to automate tedious tasks, generate insights and content faster, and augment their creativity, all under the watchful eye of appropriate controls.

To recap, enterprises need a secure AI workspace because it addresses the core concerns holding back AI adoption – data leaks, compliance breaches, inconsistent tool use, and lack of oversight. We no longer have to choose between security and innovation. With the right platform, it’s possible to have both. When implemented correctly, a secure AI workspace becomes an enabler of digital transformation. Employees feel confident using AI on real business problems (since they know it’s an approved, safe tool), and leadership gains visibility into how AI is being used and the value it delivers. It fosters a culture of responsible AI adoption – something increasingly important not just internally, but to customers and regulators who want to know that your company uses AI ethically and safely.

At Cloudsine, we’ve seen firsthand with CoSpaceGPT how a well‑designed AI workspace can transform AI usage. Teams that once tip‑toed around ChatGPT (or avoided it entirely) can now collaborate with AI daily – brainstorming proposals, generating code snippets, answering client queries – all within a secure sandbox that protects their data and monitors outputs. And they do so with multiple AI models at their fingertips, without jumping between dozens of apps. Our approach, and others in this space, will undoubtedly evolve as AI technology advances. But the fundamental principle remains: enterprises need an AI environment they can trust.